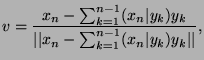

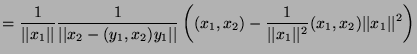

The theorem states that, given any sequence of ![]() linearly independent vectors ![]() , there is one and only one sequence of orthonormal vectors ![]() such that:

Let us prove it by induction on ![]() , the number of initial linearly independent vectors.

If ![]() , the theorem is obvious and the single vector sought is ![]() .
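To make the statement concrete, here is a minimal numerical sketch. It assumes the construction behind the theorem is the classical Gram-Schmidt process on real vectors with the standard dot product. The formulas of this section are not visible in the text, so this is an assumption, and the names `dot` and `orthonormalize` are hypothetical. Under that assumption, for a single vector the procedure reduces to dividing the vector by its norm.

```python
import math

def dot(u, v):
    """Standard dot product of two equal-length real vectors."""
    return sum(a * b for a, b in zip(u, v))

def orthonormalize(vectors):
    """Classical Gram-Schmidt (assumed construction, not taken from the source).

    Returns a list of orthonormal vectors built one at a time from the input.
    """
    basis = []
    for v in vectors:
        # Remove from v its components along the vectors already built.
        w = list(v)
        for e in basis:
            c = dot(v, e)
            w = [wi - c * ei for wi, ei in zip(w, e)]
        norm = math.sqrt(dot(w, w))
        # A (near-)zero remainder means v depends on the previous vectors.
        if norm < 1e-12:
            raise ValueError("vectors are not linearly independent")
        basis.append([wi / norm for wi in w])
    return basis

# Two linearly independent vectors in the plane.
e = orthonormalize([[3.0, 4.0], [1.0, 0.0]])
```

With a single input vector the inner loop never runs, so the result is just that vector scaled to unit length, which mirrors the base case of the induction.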

We now suppose the theorem is true for ![]() . We consider an initial sequence of ![]() linearly independent vectors ![]() . By the inductive hypothesis we already have a sequence of vectors ![]() such that:

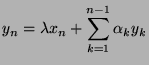

If there is a sequence of ![]() orthonormalized vectors ![]() such that:

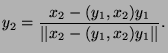

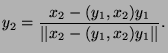

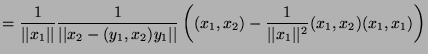

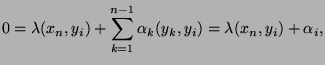

Analysis: If such a ![]() exists, then ![]() .

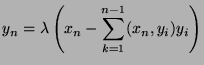

Therefore,

Synthesis: If we just take a closer look at: